Algocracy Newsletter #3

No easter eggs, just interesting stuff.

In this newsletter, we aggregate the most interesting recently published online content on Artificial Intelligence, algorithms and their fairness, privacy, and other topics important for the emerging world of algocracy - a world governed by algorithms.

If this is your first time seeing this, please read our manifesto and subscribe if you find it interesting.

Visualization of the week: The Anatomy of AI

by Josef Holy

Breathtaking visualization (by Kate Crawford and Vladan Joler) of a COMPLETE anatomy of Amazon Alexa.

It’s staggering to realize how much mass and energy have to be put into coordinated motion so people can use voice commands to ask for the weather forecast.

Natural resources flow through global hyperconnected supply chains into the production of the physical cylinder itself; large-scale distributed server farms power the cloud where “the intelligent service” lives; continuous training is done by human labor - contractors and unsuspecting reCAPTCHA users (= all of us); and finally, the databanks continuously capture everything about people and what they do, aggregating it into the ultimate source of value and power.

This is the Standard Oil of our time.

(link)

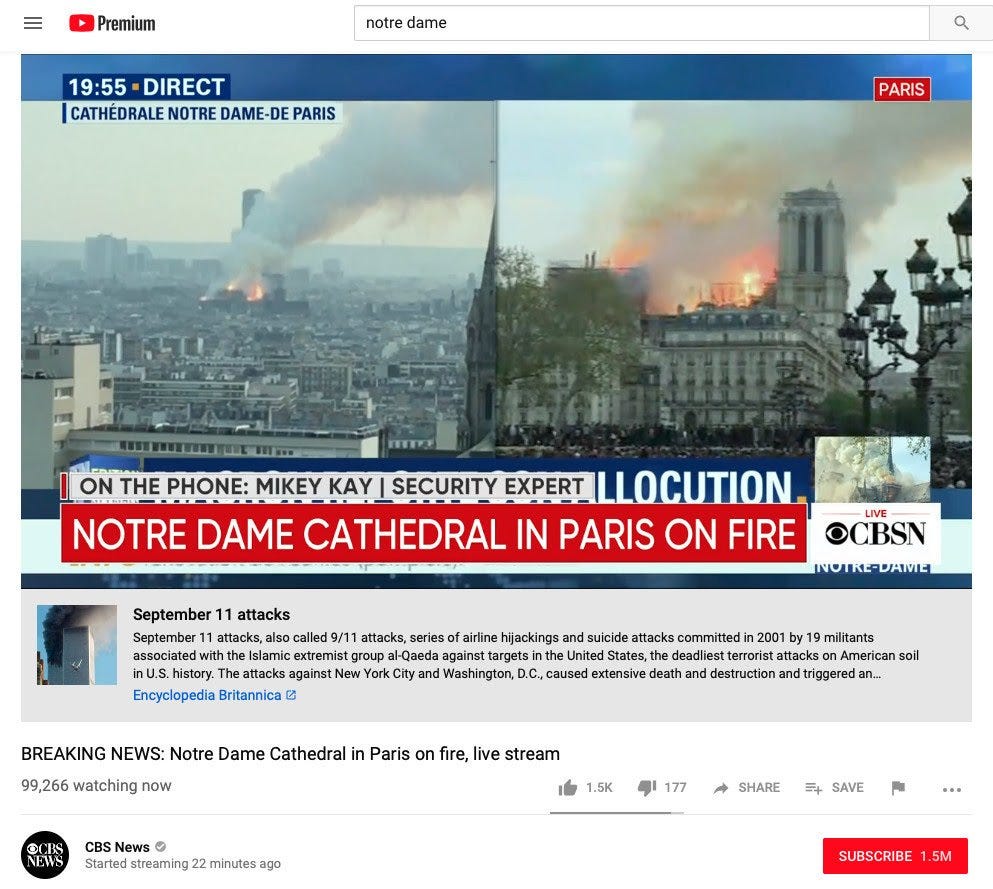

Fail of the Week: “People who like this fire also liked”

by Josef Holy

It is not entirely clear which similarity clusters led the video platform’s algorithm to recommend a Britannica article about the completely unrelated 9/11 attacks in the live feed of the Notre Dame Cathedral fire.

It is clear, though, that the path to the Singularity and AGI will be long…

Untold History of AI: Algorithmic Bias Was Born in the 1980s

by Josef Holy

Algorithmic bias is at the center of current discussions around AI ethics.

One of the first documented cases of algorithmic bias with significant impact dates from the 1980s, when St. George’s Hospital Medical School in London started to use an algorithm to screen student applications for admission.

The original intent was to automate the manual task of reviewing thousands of student applications and to make the admission process more transparent.

In 1986, the UK Commission for Racial Equality launched an inquiry, which found that the algorithm was biased against people of color and women.

Key learnings:

Gender and racial discrimination was “normal” in UK universities at that time, but it was implicit - not codified anywhere - and thus not prosecuted. The commission could identify it in this case only because it had been made explicit in the form of a computer algorithm.

There was a significant cultural aspect - faculty staff members simply trusted the algorithm too much, taking its scores for granted.

This cultural aspect is one of the key takeaways from this history lesson: algorithms (and AI) are just tools, and their products (= information) shouldn’t be accepted blindly.

(link)

Notes on AI Bias

by Josef Holy

Algorithmic (AI) bias is a wide and complex topic, which we will cover in more depth in future issues of this newsletter.

In the meantime, go and read this very accessible post by Benedict Evans.

The Past Decade and Future of AI’s Impact on Society

A great article about the current state of AI by Joanna J. Bryson, one of the leading researchers in the field of AI ethics. It’s a chapter from the book Towards a New Enlightenment? A Transcendent Decade (OpenMind 2019; see book trailer). It is academic, long, thorough and overarching, and it addresses two main questions:

What have been and will be the impacts of pervasive synthetic intelligence?

How can society regulate the way technology alters our lives?

Here are some of the key points worth mentioning, since they provide a simple, yet powerful conceptual and down-to-earth framework for thinking about AI (and ethics):

Definition of intelligence

Intelligence is the capacity to do the right thing at the right time, in a context where doing nothing (making no change in behavior) would be worse.

In essence, intelligence is a subset of computation, that is, the transformation of information, which is a physical process and needs time, space and energy. People often misunderstand the difference between computation and math. Math doesn’t need space, time and energy, but it is also not real in the same sense. Given this definition of intelligence, Joanna Bryson presents AI as “any artifact that extends our own capabilities to perceive and act.”

Artificial General Intelligence

The values, motivations, even the aesthetics of an enculturated ape cannot be meaningfully shared with a device that shares nothing of our embodied physical (“phenomenological”) experience.

The very concept of Artificial General Intelligence (AGI) is incoherent. In fact, human intelligence itself has significant limitations, namely bias and combinatorics. Joanna claims that, from an evolutionary perspective, “biological intelligence is part of its evolutionary niche, and is unlikely to be shared by other biological species…” and hence AGI is a myth, because no amount of (natural or artificial) intelligence will be able to solve all problems. Not even an extremely powerful AI can be very human-like, because it embodies an entirely different set of motivations and reward functions.

Public Policy and Ethics

Taxing robots and extending human life via AI are ideas with populist appeal. Unfortunately, both are based on ignorance about the nature of intelligence.

Joanna is a strong critic of two well-known AI ethics concepts: e-personhood and value alignment. E-personhood, the idea of AI legal persons, would increase inequality in society by shielding companies and wealthy individuals from liability. Value alignment, on the other hand, is the idea that we should ensure that society leads and approves of where science and technology go. While it sounds good and democratic, it is rather populist. Joanna believes that we do not need a new legal framework for AI governance, but rather optimization of the current framework and governmentally empowered expert groups that would shape public policy.

(link)

Other Links

The Chinese government uses algorithms (facial recognition and beyond) to track (and effectively geo-fence) members of the Muslim minority (link).

The PR disaster of Mark Zuckerberg and Facebook is getting worse every week. It’s hard to keep track of it, but it now seems clear that they were actually considering selling data for profit and for competitive advantage (link).

Is the United States finally jumping on the regulation train? The U.S. Congress wants to protect citizens from bad AI (link).

Will AI end or enhance human art? This article claims the latter, although it looks like proper tools (UIs and metaphors for interacting with AI) are missing, as current art-tinkerers have to resort to programming and spreadsheets to leverage the power of AI (link).

Thanks for scrolling all the way down here. We hope you’ve found at least some parts interesting. You will hear from us again in about a week.